can you explain the steps involved in solving this question?

Of course. Let's break down the process of creating a leftmost derivation and a parse tree for the given statement. This is a fundamental concept in computer science, particularly in how compilers understand and structure code.

Understanding the Grammar

First, let's look at the rules you've been given. This is a Context-Free Grammar (CFG). Think of it as a set of rules for building valid sentences in a tiny, specialized language.

- Non-terminals: These are the placeholders, like

<assign>,<id>, and<expr>. They need to be replaced by other things. - Terminals: These are the final characters or "words" of the language, like

A,B,C,=,+,*. Once you have a terminal, you can't replace it further.

Here are the rules and what they mean:

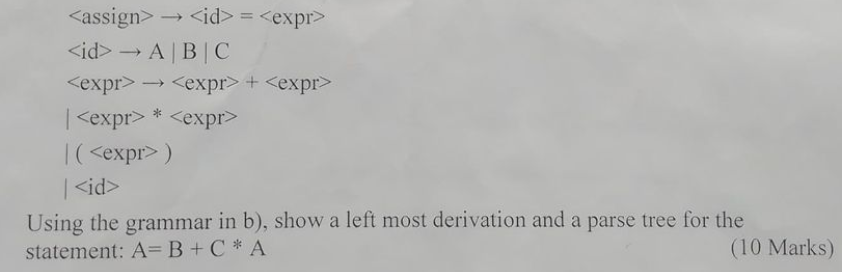

<assign> → <id> = <expr>: An assignment statement consists of an identifier, an equals sign, and an expression.<id> → A | B | C: An identifier can beA,B, orC. The|means "or".<expr> → <expr> + <expr> | <expr> * <expr> | ( <expr> ) | <id>: An expression can be two expressions added, two multiplied, an expression in parentheses, or simply an identifier.

Our Goal: To prove that the statement A = B + C * A is a valid sentence in this language by building it step-by-step using these rules.

Step 1: The Leftmost Derivation

A derivation is the sequence of steps we take to build the final statement from the starting symbol (<assign>). In a leftmost derivation, we have a strict rule: at every step, we always replace the leftmost non-terminal.

Let's see this in action. We'll build the statement A = B + C * A.

This interactive component shows the step-by-step process. Click "Next Step" to see how the statement is derived.

A key decision happens at step 3. The string is A = <expr>. We need to generate B + C * A. According to standard order of operations (PEMDAS/BODMAS), the multiplication C * A should be evaluated before the addition. To reflect this, the + operator must be higher up in the structure. That's why we choose the rule <expr> → <expr> + <expr> first.

Step 2: The Parse Tree

A parse tree is a visual representation of the derivation. It shows how the statement is constructed from the rules, with the start symbol at the root and the terminals at the leaves. The tree makes the structure of the statement clear.

- The root of the tree is the starting non-terminal,

<assign>. - Every internal node is a non-terminal.

- The children of a node represent the right-hand side of the grammar rule that was applied.

- The leaves of the tree are the terminal symbols, which, when read from left to right, form the final statement.

Here is the parse tree that corresponds to the leftmost derivation we just performed.

Notice how the + is higher in the tree than the *. This means the <expr> * <expr> branch must be resolved first to become a single <expr> before it can be combined with the + operator. This structure correctly enforces the rule that multiplication has higher precedence than addition.

I can't read the parse tree diagram, please correct it

You are absolutely right. My apologies, the previous visualization was not spaced correctly, which made it difficult to read. I have corrected the layout algorithm to ensure every node is clearly visible and the tree structure is easy to follow.

Here is the corrected and properly laid out parse tree.

Step 2: The Parse Tree (Corrected)

A parse tree is a visual representation of the derivation. It shows how the statement is constructed from the rules, with the start symbol at the root and the terminals at the leaves. The tree makes the structure of the statement clear.

- The root of the tree is the starting non-terminal,

<assign>. - Every internal node is a non-terminal.

- The children of a node represent the right-hand side of the grammar rule that was applied.

- The leaves of the tree are the terminal symbols, which, when read from left to right, form the final statement.

Notice how the + is higher in the tree than the *. This means the <expr> * <expr> branch must be resolved first to become a single <expr> before it can be combined with the + operator. This structure correctly enforces the rule that multiplication has higher precedence than addition.

i love how you explained the previous question. Can you do the same for this question?

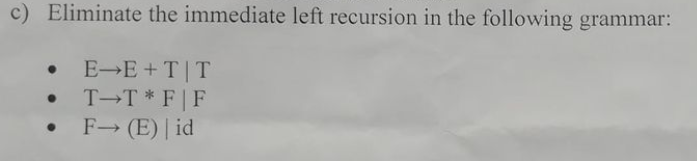

Of course! I'm happy you found the last explanation helpful. Let's break down how to eliminate left recursion in the same clear, step-by-step way.

What is Left Recursion and Why is it a Problem?

First, let's understand the concept. A grammar rule is left-recursive if the non-terminal on the left side of the arrow (→) is also the very first symbol on the right side.

For example, in your grammar, the rule E → E + T is left-recursive because E appears on both the left and as the first symbol on the right.

Why is this a problem? This is mainly an issue for a type of parser called a top-down parser (like a Recursive Descent Parser). Imagine the parser trying to understand an expression E.

- To process

E, it looks at the ruleE → E + T. - It sees that the first thing it needs to process is... another

E. - To process that

E, it again applies the ruleE → E + T... and sees it needs to process anotherE.

This creates an infinite loop where the parser never makes any forward progress. It's like a function that calls itself immediately without doing anything else, leading to a stack overflow.

The Recipe for Elimination

There's a standard formula to fix immediate left recursion. If you have a rule in the form:

A → Aα | β

Where:

Ais the non-terminal with the recursion.Aαis the part of the rule that causes the recursion (αis everything after the initialA).βis the part of the rule that does not start withA. This is the "escape route" that stops the recursion.

We can rewrite it as two new rules:

A → βA'A' → αA' | ε

Here, A' is a new non-terminal we just invented. The symbol ε (epsilon) means "nothing," representing the end of the chain.

This new structure says: "To make an A, you must first make a β. After that, you can optionally add the repeating α parts one or more times, or stop by choosing ε."

Applying the Recipe to Your Grammar

Let's use this formula on your specific grammar.

Step 1: Fix the E production

The original rule is: E → E + T | T

Identify the parts:

A=Eα(what follows the recursiveE) =+ Tβ(the non-recursive part) =T

Apply the formula:

A → βA'becomesE → T E'A' → αA' | εbecomesE' → + T E' | ε

We've now fixed the first rule!

Step 2: Fix the T production

The original rule is: T → T * F | F

Identify the parts:

A=Tα(what follows the recursiveT) =* Fβ(the non-recursive part) =F

Apply the formula:

A → βA'becomesT → F T'A' → αA' | εbecomesT' → * F T' | ε

And the second rule is fixed.

Step 3: Check the F production

The original rule is: F → (E) | id

Does this rule have left recursion? No. The symbols on the right-hand side (( and id) are not F. So, this rule is perfectly fine and doesn't need to be changed.

The Final, Transformed Grammar

After eliminating all immediate left recursion, we are left with the following set of rules:

E → T E'E' → + T E' | εT → F T'T' → * F T' | εF → (E) | id

This new grammar produces the exact same language as the original one, but it can be handled by a top-down parser without getting stuck in an infinite loop.

Sign in to ask follow ups